Beyond the Numbers with Strength-IQ

With experience across the NBA, NBL, NRL and Olympic programs, strength and conditioning coach Nathan Spencer has developed a performance approach that leverages technology to challenge traditional training practices.

Through his company, Strength-IQ, he now focuses on the meaningful application of objective measurement technology, helping practitioners navigate the landscape of modern performance training.

What shaped your current approach to strength and conditioning?

My experience in high-performance environments has reinforced the idea that technology should support practice, not dictate it. In elite sporting organizations, every method and philosophy needs to be justified. This justification often relies on advanced technology, but more data doesn’t necessarily lead to better outcomes.

High-performance testing technologies, such as ForceDecks, are available in sporting organizations worldwide. While many practitioners use the same (or similar) testing variations, it’s the environment and systems they put in place that ultimately shape the technology’s impact.

Information is abundant in high-performance sport, but the ability to interpret and use it effectively is limited. Today’s practitioners are expected to make sense of the data they gather and apply it effectively, rather than look for the “best test” to use in a specific setting.

What do practitioners miss when collecting data?

I regularly work with elite organizations to improve how they collect and interpret performance data, and I’ve found that many would benefit from implementing systems that enable deeper analysis. Not every practitioner needs to be a data scientist, but each should have a basic appreciation of the information that the technology actually provides.

This begins with quality test selection. Rather than creating a “catch-all” test that is perfectly representative of the sport, I focus on tests that capture the sport-specific qualities that are meaningful for performance coaches to track. By breaking tasks down into their components, we can better conceptualize what is required for the assessments to quantify the qualities of interest.

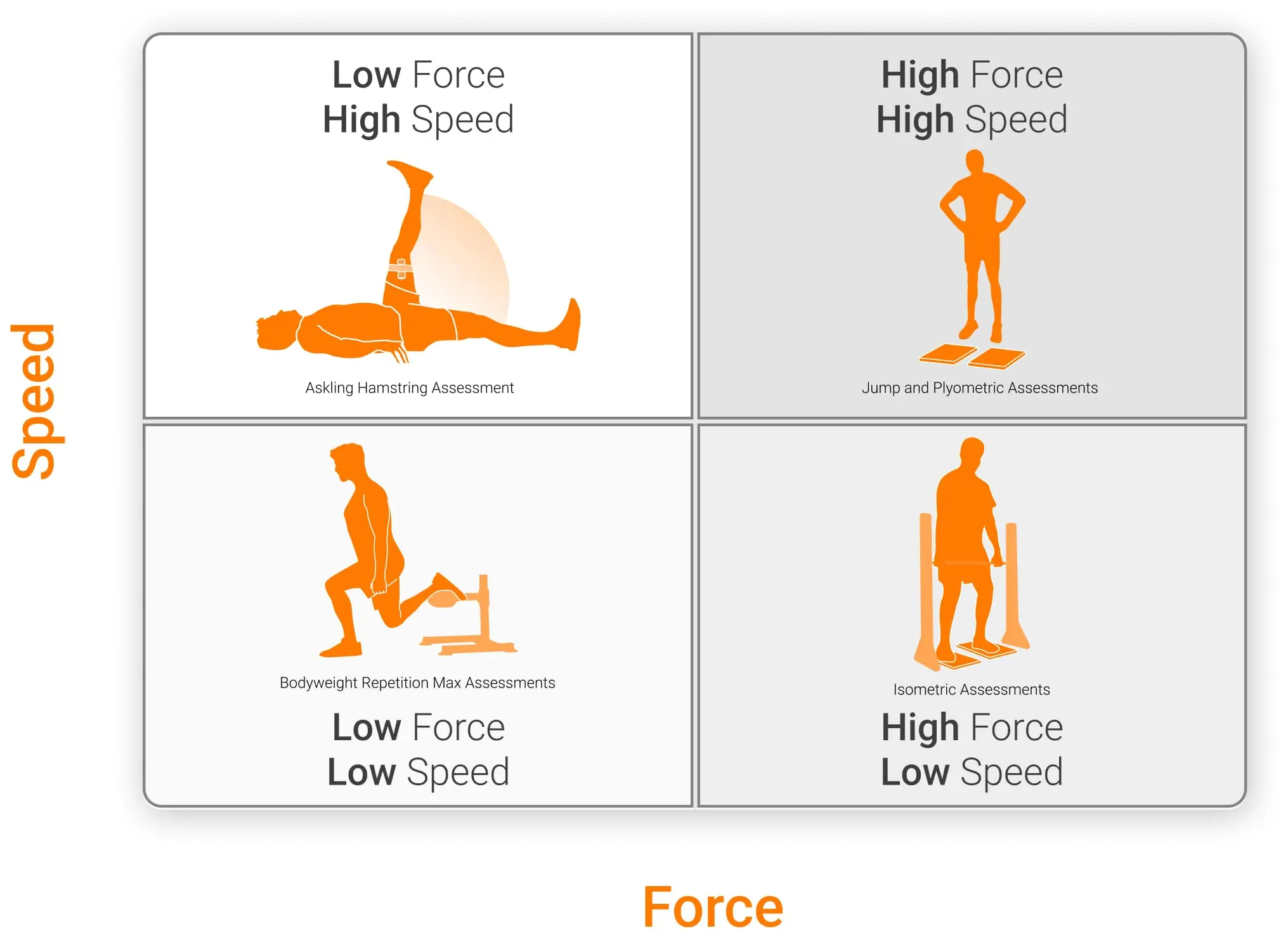

I use the following framework to compare assessments based on their force and speed of execution:

Teams sometimes get into the habit of running a test because it’s deemed important as an industry standard screening, rather than working backwards from an adaptation-led approach. Matching desired adaptations to corresponding tests can help answer questions more effectively while also cutting down on time investments. Efficiency is even more critical in the context of team sport environments.

How does this approach simplify metric selection?

When educating different organizations, I often instruct practitioners to group metrics by their purpose. Output-driven metrics (e.g., jump height or peak power during jump tests such as the countermovement jump [CMJ]) indicate what the athlete produced, while strategy-based metrics (e.g., countermovement depth or contraction time) tell me how they produced it. That simple distinction helps filter and organize data, providing more meaningful insight.
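
As a minimal sketch of that grouping, the snippet below tags each metric as either output or strategy and splits a set of test results accordingly. The metric keys, the `group_by_purpose` helper and the sample values are hypothetical and chosen for illustration; they are not ForceDecks field names or real athlete data.

```python
from enum import Enum


class Purpose(Enum):
    OUTPUT = "output"      # what the athlete produced
    STRATEGY = "strategy"  # how the athlete produced it


# Hypothetical metric keys; the grouping follows the examples given in the text.
METRIC_PURPOSE = {
    "jump_height": Purpose.OUTPUT,
    "peak_power": Purpose.OUTPUT,
    "countermovement_depth": Purpose.STRATEGY,
    "contraction_time": Purpose.STRATEGY,
}


def group_by_purpose(results):
    """Split one test's results into output and strategy metrics."""
    grouped = {purpose: {} for purpose in Purpose}
    for name, value in results.items():
        purpose = METRIC_PURPOSE.get(name)
        if purpose is not None:  # skip metrics that have not been classified
            grouped[purpose][name] = value
    return grouped


# Illustrative CMJ values only, not real athlete data.
cmj = {
    "jump_height": 0.42,            # m
    "peak_power": 4800.0,           # W
    "countermovement_depth": -0.31, # m
    "contraction_time": 0.78,       # s
}
grouped = group_by_purpose(cmj)
print(grouped[Purpose.OUTPUT])
print(grouped[Purpose.STRATEGY])
```

Keeping the classification in one lookup table means a new metric only needs to be tagged once before it appears in the right group of a report.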

For example, a high-force, high-speed test, such as the drop jump, can be used to track simple output metrics like peak power / body mass or jump height, helping practitioners better understand athlete performance over time. Similarly, if we track a strategy metric, such as contact time, we can better monitor changes in an athlete’s preferred movement strategy over time, which ultimately influences how we program exercises like plyometrics in the future.

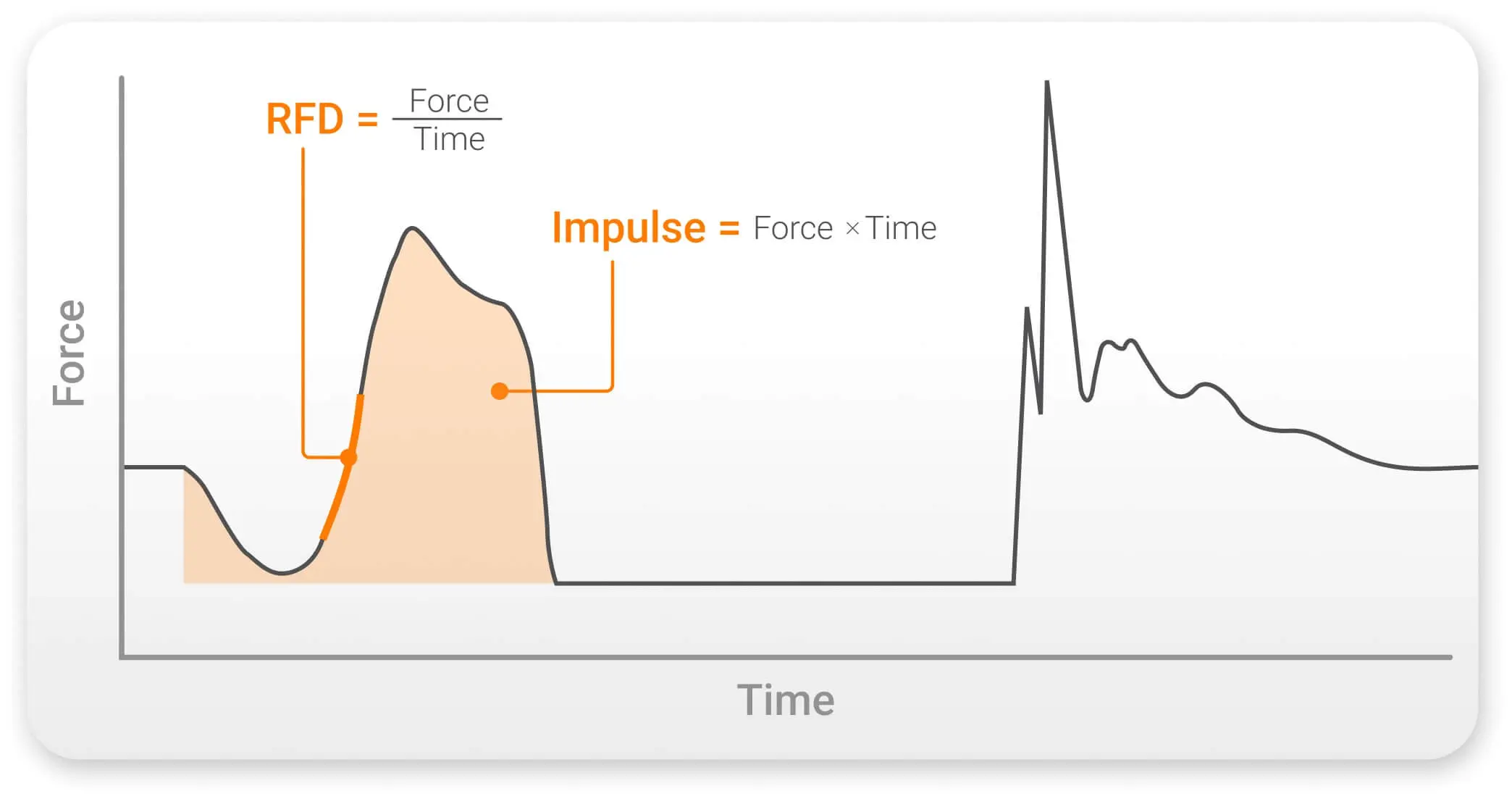

Diving deeper, I look at the relationships between force and time. These can be seen in metrics such as impulse and rate of force development (RFD).

RFD and impulse visualized in a CMJ force trace.
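
To make the force-time relationship concrete, here is a rough sketch of how both metrics could be computed from a sampled vertical force trace. It assumes evenly spaced samples, defines net impulse as the area between the force curve and body weight, and defines RFD as the average slope between two chosen samples. The function names, the 1000 Hz sampling rate and the synthetic trace are assumptions for illustration and do not describe how ForceDecks calculates its own metrics.

```python
import numpy as np


def net_impulse(force, body_weight, dt):
    """Net impulse (N*s): area between the force trace and body weight,
    estimated with the trapezoidal rule."""
    net = np.asarray(force, dtype=float) - body_weight
    return float(np.sum((net[1:] + net[:-1]) * 0.5 * dt))


def average_rfd(force, dt, start, end):
    """Average RFD (N/s): change in force between two sample indices
    divided by the time elapsed between them."""
    force = np.asarray(force, dtype=float)
    return float((force[end] - force[start]) / ((end - start) * dt))


# Synthetic trace: force rises linearly from 800 N to 1800 N over 0.2 s.
dt = 0.001                               # assumed 1000 Hz sampling
force = np.linspace(800.0, 1800.0, 201)  # N
body_weight = 800.0                      # N

impulse = net_impulse(force, body_weight, dt)   # roughly 100 N*s
rfd = average_rfd(force, dt, 0, 200)            # roughly 5000 N/s
print(impulse, rfd)
```

Both values come from the same two signals, force and time: impulse accumulates force over a time window, while RFD describes how quickly force changes within it.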

Rather than searching for a single magic metric, creating simple, repeatable testing environments allows tools like ForceDecks to reveal deeper performance insights. Practitioners can keep assessments straightforward and let the technology handle complexity.

Identifying these implementation strategies and building them into a broader system can help teams better integrate technology into their work environment.

I frame my test selection by starting with the question I’m trying to answer, not the test itself. Instead of asking “what’s the best test?”, I identify the specific athletic qualities I need to evaluate and the adaptations that underpin those qualities.

Once the target adaptation is clear, I choose the test that best reflects the force-time characteristics I’m interested in.

High-force, high-speed actions, such as sprinting or plyometrics, lend themselves to different assessments than high-force, low-speed actions like isometrics, eccentrics and isotonics. Creating this alignment makes testing more intentional and directly tied to what actually matters in the sport.

How can practitioners identify which metrics are worth pursuing?

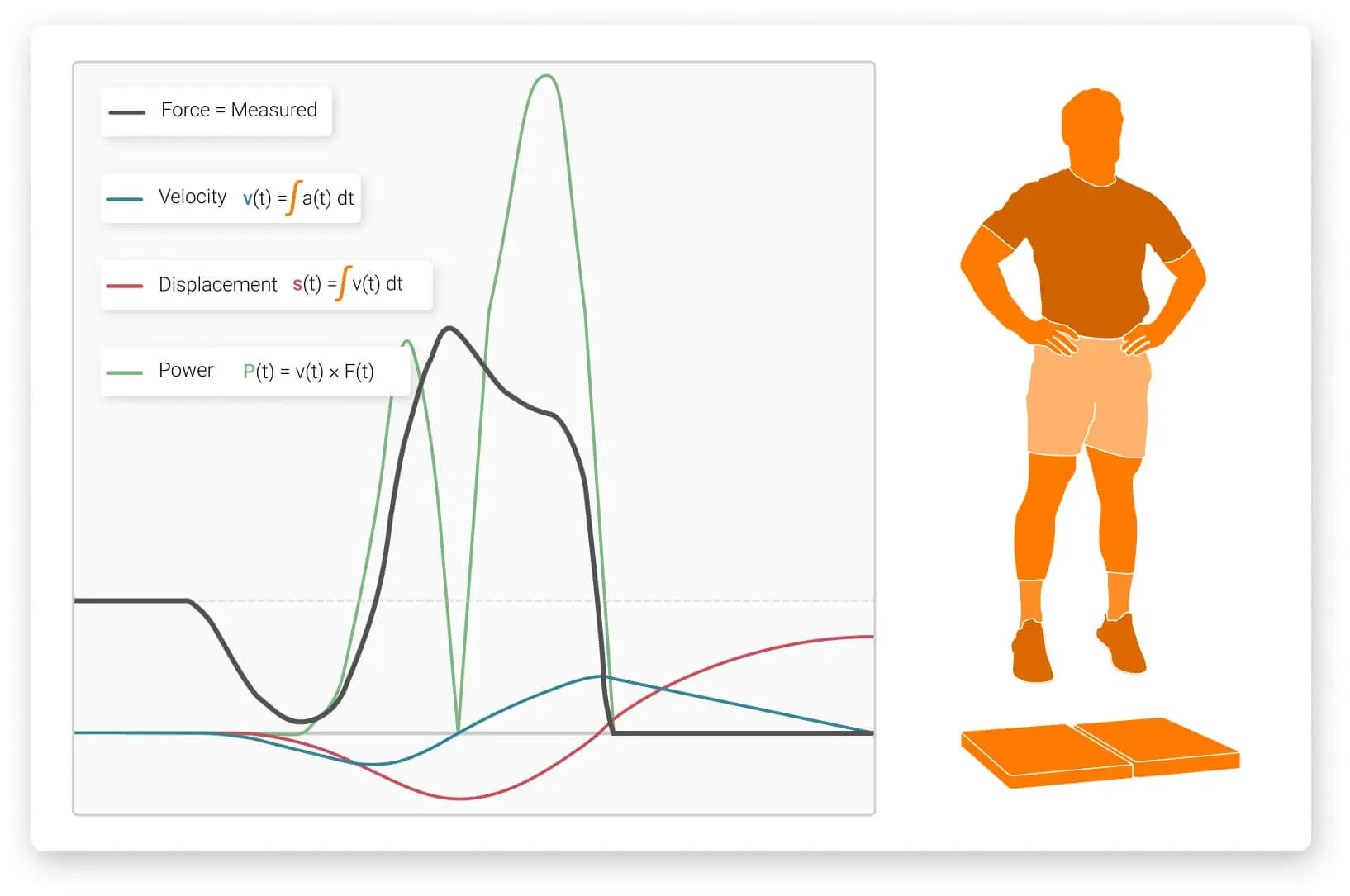

Start by understanding the relationship between what ForceDecks directly measures (force and time) and the additional variables derived from those signals. Calculated metrics such as velocity, displacement and power aren’t lesser; they simply represent different layers of interpretation built from the raw data. Knowing this helps practitioners match each metric to the physical quality they want to assess.

Athlete performing a CMJ with basic calculations and visualizations of metrics.
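
The layering from measured to calculated metrics can be sketched with basic mechanics: net force divided by body mass gives acceleration, integrating acceleration gives velocity, integrating velocity gives displacement, and force multiplied by velocity gives power. The code below is a simplified illustration that assumes the athlete starts motionless, that only vertical force is considered and that samples are evenly spaced. The helper names and sample values are hypothetical, and the sketch is not a description of ForceDecks' internal processing.

```python
import numpy as np

G = 9.81  # gravitational acceleration, m/s^2


def cumulative_integral(y, dt):
    """Running trapezoidal integral of y, starting from zero."""
    y = np.asarray(y, dtype=float)
    steps = (y[1:] + y[:-1]) * 0.5 * dt
    return np.concatenate(([0.0], np.cumsum(steps)))


def derive_metrics(force, body_mass, dt):
    """Derive velocity (m/s), displacement (m) and power (W) from a
    vertical force trace, assuming zero initial velocity and displacement."""
    force = np.asarray(force, dtype=float)
    acceleration = (force - body_mass * G) / body_mass
    velocity = cumulative_integral(acceleration, dt)
    displacement = cumulative_integral(velocity, dt)
    power = force * velocity
    return velocity, displacement, power


# Synthetic trace: an 80 kg athlete applies twice body weight for 0.1 s.
body_mass = 80.0
dt = 0.001  # assumed 1000 Hz sampling
force = np.full(101, 2 * body_mass * G)

velocity, displacement, power = derive_metrics(force, body_mass, dt)
print(velocity[-1], displacement[-1], power[-1])
```

Because every derived variable is built by integrating the one before it, small errors in the measured force or in the body mass estimate carry through to velocity, displacement and power, which is one reason a quiet, consistent start to each trial matters.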

A practical first step is pairing metrics with assessments. Using the drop jump example from before, there may be instances when metrics like drop landing peak force are helpful (e.g., an asymmetry measure in a rehabilitation context). Most often, however, the drop jump is best suited to monitoring reactive strength or rapid strength through metrics such as the following:

- Reactive strength index (RSI)

- Jump height

- Contact time

- Peak power / body mass

- Peak eccentric power

- Peak concentric power
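
Several of these metrics are closely linked. As a hedged illustration, the sketch below uses one common definition of RSI, jump height divided by contact time, and estimates jump height from flight time with the projectile relationship h = g·t²/8. Other definitions exist (for example, flight time divided by contact time), and the function names and sample values are assumptions for illustration, not ForceDecks calculations.

```python
G = 9.81  # gravitational acceleration, m/s^2


def jump_height_from_flight_time(flight_time):
    """Estimate jump height (m) from flight time (s): h = g * t^2 / 8."""
    return G * flight_time ** 2 / 8.0


def reactive_strength_index(jump_height, contact_time):
    """RSI as jump height (m) divided by ground contact time (s)."""
    if contact_time <= 0:
        raise ValueError("contact_time must be positive")
    return jump_height / contact_time


# Illustrative values only: two landing strategies from the same athlete.
short_contact = reactive_strength_index(0.40, 0.16)  # about 2.5
long_contact = reactive_strength_index(0.36, 0.24)   # about 1.5
print(short_contact, long_contact)
print(jump_height_from_flight_time(0.5))  # about 0.31 m
```

Because contact time sits in the denominator, a modest change in how long the athlete spends on the ground can move RSI considerably even when jump height barely changes, which is why it helps to read RSI alongside its two components.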

Context will always influence metric selection, but aligning test and metric selection frameworks can be simple and meaningful for practitioners.

For those who want to learn and apply these methods more effectively, VALD Resources provides clear frameworks for test selection, key metrics and interpretations through Practitioner’s Guides, Knowledge Base articles and research summaries.

Ultimately, clarity in systems and processes matters most. A consistent framework for choosing tests, understanding the qualities they target and recognizing meaningful change allows practitioners to focus on the metrics that truly inform decisions – leading to more confident, accurate and athlete-centered outcomes.

What’s the biggest misconception you encounter?

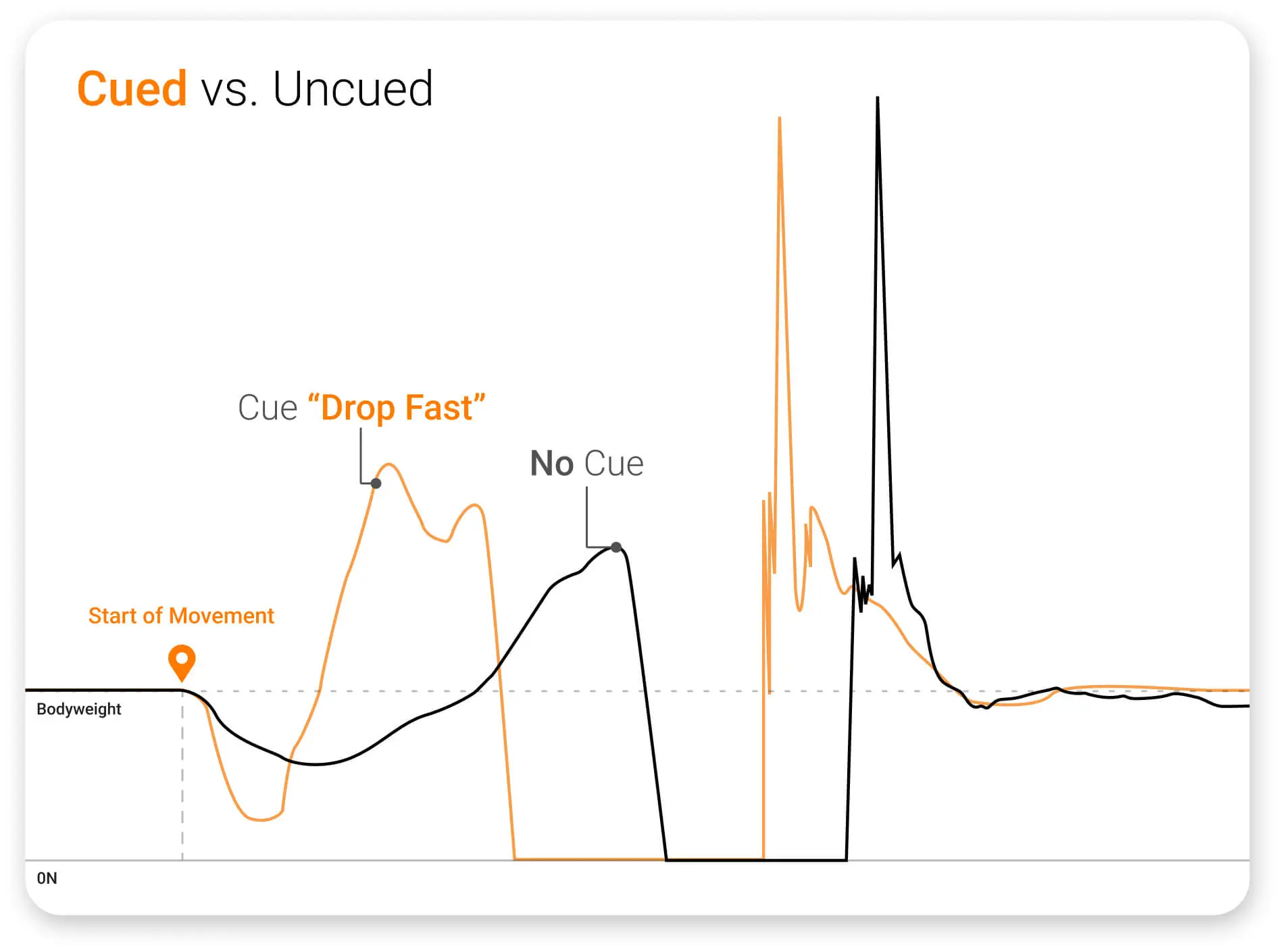

One is the relationship between cueing, strategy and performance outcomes. Cueing influences mechanical strategy, which can impact test performance. Similar to exercise execution, how practitioners cue athletes during assessments can influence outcomes.

When an athlete is asked to jump “deep and fast,” “deep and slow,” “shallow and fast” or “shallow and slow,” outputs like jump height or power may stay relatively stable. However, countermovement depth, eccentric peak velocity and timing characteristics can shift substantially. The same is true for drop jumps, where landing strategy can change RSI from 2.5 to 1.5 in the same athlete.

Two force traces for a CMJ with different cues showing different results.

Words affect an athlete’s strategy, which determines their movement patterns and ultimately drives performance outcomes. If we make decisions based solely on the final outcome while ignoring cueing, strategy and movement quality, we miss critical information.

How can practitioners draw more from data?

Modern testing is most powerful when simple systems align with clear intent. By pairing purposeful assessments with meaningful interpretation, practitioners can turn objective data into practical, repeatable and athlete-centered decision-making.

To learn more about how ForceDecks can support repeatable testing, guide metric selection and match assessments to the adaptations you want to measure, get in touch with our team.