A Framework for Choosing the Right Tests and Measures

About the Author

Lachlan James, PhD, is an Associate Professor of Sport Science at La Trobe University and the Founder of ForceLab Strength Science Solutions. With over 60 peer-reviewed publications, his research focuses on strength science, particularly strength diagnostics and training adaptations.

Lachlan currently supervises nine PhD students and oversees strength science projects across AFL and NRL clubs, as well as at institutes of sport including the Queensland Academy of Sport and the Victorian Institute of Sport.

Introduction

Physical performance testing (e.g., assessing strength characteristics, speed qualities and endurance) has never been more accessible, yet navigating test and metric selection can be deceptively complex.

This blog expands on insights from a recently published peer-reviewed article written by Lachlan (James et al., 2024), offering a distinct, practitioner-focused perspective.

In this article, Lachlan proposes a structured framework specifically designed to help sport scientists and coaches select tests that objectively support competition performance, link key metrics to meaningful outcomes, align assessments with team objectives and ultimately make smarter, evidence-informed decisions.

Why Testing Needs a Rethink

Performance testing helps identify physical qualities, diagnose key issues and guide training toward areas of greatest need. High-quality tools and software such as the VALD suite are now embedded in daily workflows across competitive sport.

However, with greater access to data comes a new challenge: data without direction. Testing reports are often comprehensive but lack clear intent. With hundreds of available metrics, determining what matters can be overwhelming. Worse, what is reported is not always what provides the most meaningful insight.

A common challenge is collecting data without turning it into actionable decisions.

This disconnect results in organizations collecting more data – but struggling to translate it into training decisions that improve performance.

In this case, the problem is not the technology itself, but rather how it is integrated within the principles and training systems we implement as practitioners. The solution lies not in the technology, but in its application.

A practitioner and athlete reviewing data collection results specific to soccer speed testing.

Making Data Count

Technology alone does not guarantee better outcomes. Without a deliberate framework, even high-quality data becomes noise, leaving practitioners asking:

- Which metrics matter?

- Which are redundant?

- Which justify our time, attention and resources?

Force plate systems such as ForceDecks generate hundreds of metrics and normative comparisons in real time, but the value depends on how strategically they are applied within a performance model.

A structured approach, grounded in modern validity theory, helps ensure relevance and clarity through two steps:

- Building a performance model that links measures to competition outcomes

- Evaluating each link critically from technical, decision-making and organizational perspectives

This framework helps practitioners ensure that measurements are not just valid and reliable, but also interpretable, actionable and performance-aligned.

Building a Performance Model

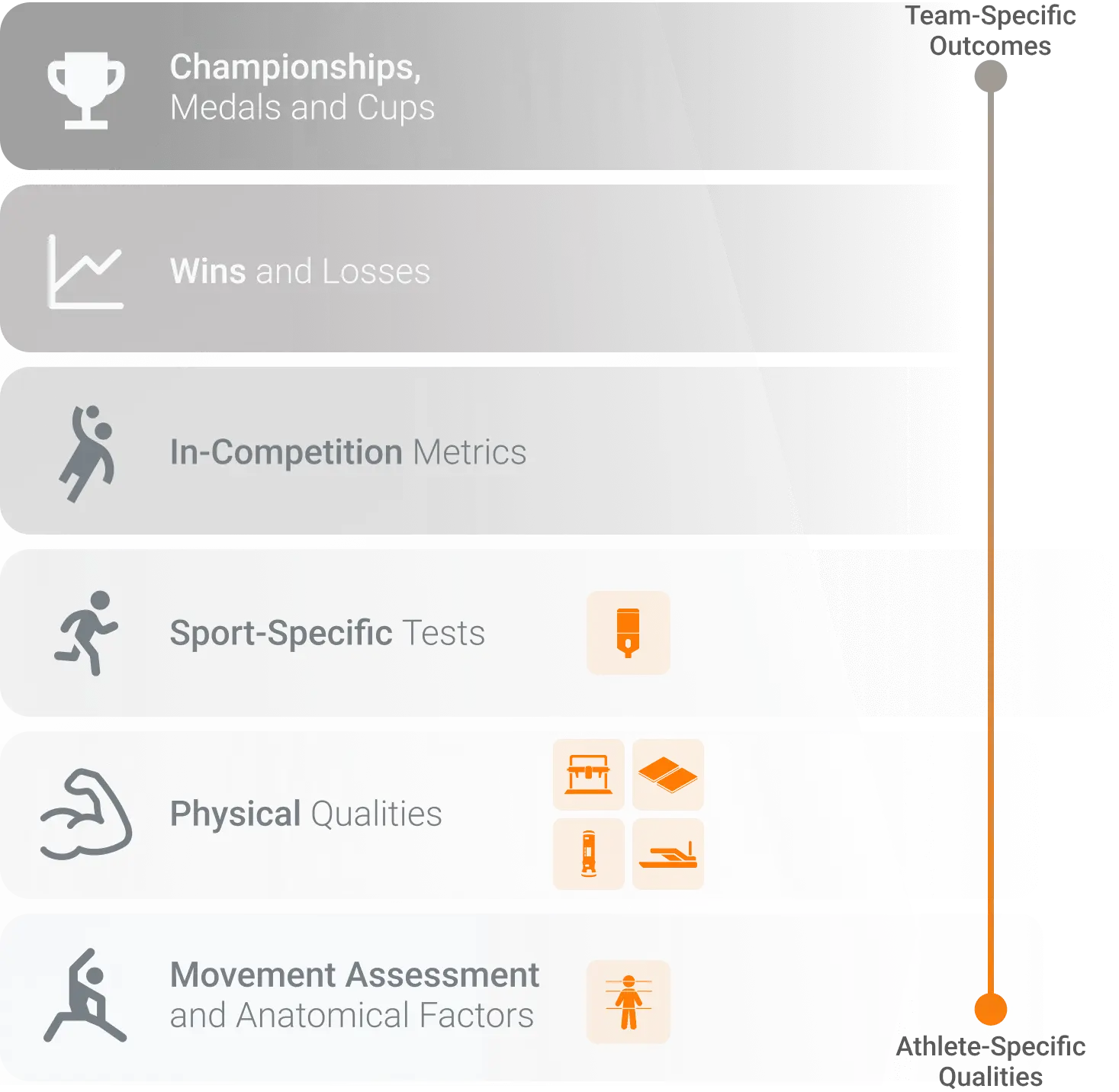

For metrics to matter – whether it is successful tackles or field goal percentage – they must yield meaningful insight into competition performance or its key components. However, physical performance, whether it be sport-specific or gym-related, is contextual, and in most cases, the link between a test and competitive success is indirect, layered and multifactorial.

This is especially true in complex sports such as field, court and combat sports, where outcomes are shaped by the interaction of technical, tactical, physical and psychological factors. Here, direct cause-and-effect between physical tests (e.g., Knee Iso-Push peak force) and outcomes (e.g., win-loss records) is rarely demonstrable.

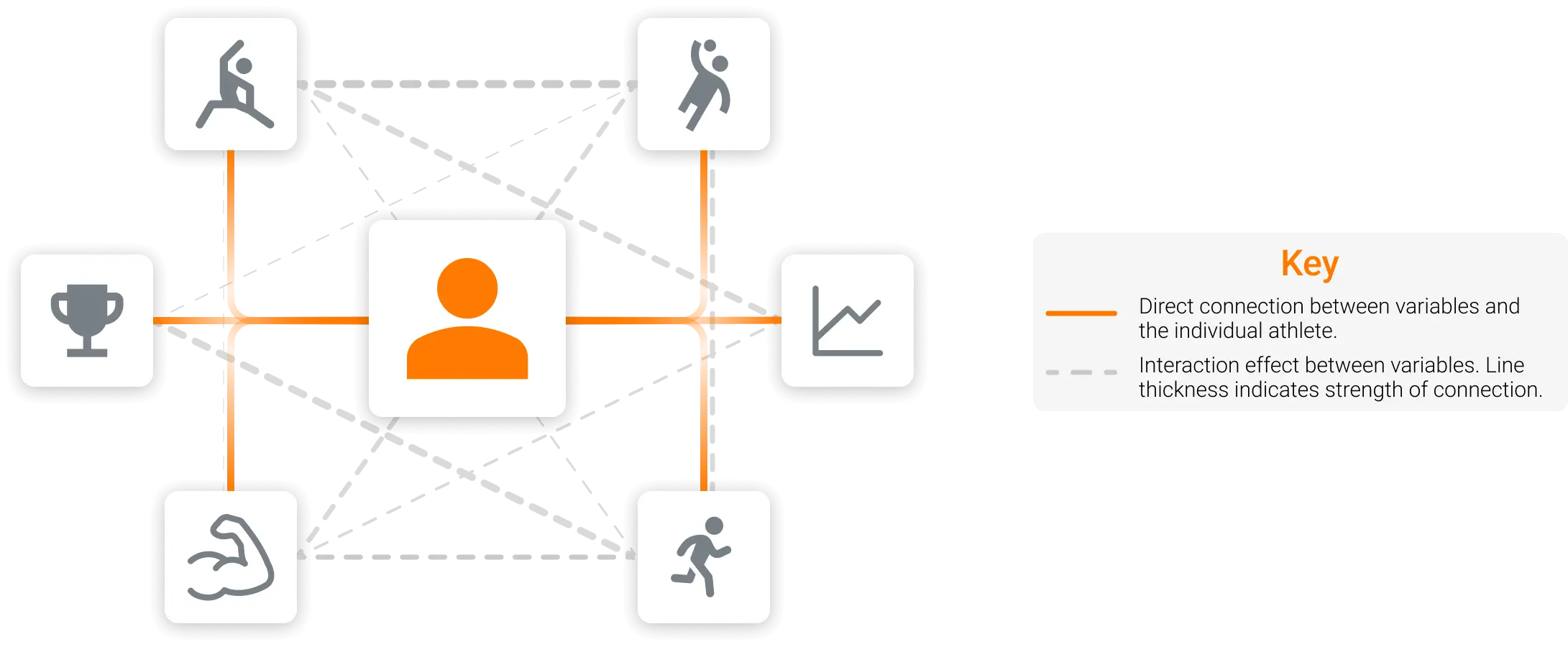

Web of determinants between an athlete (center) and the measurable layers of validity within the framework.

Recognizing these layers forms the foundation of a performance model – bridging the gap between objective testing and real-world success.

Performance Model Construction

To begin constructing a performance model, first identify the sport’s key performance indicators (KPIs), which are typically action variables capturing critical aspects of competition (e.g., successful tackles in rugby or field goal percentage in basketball).

KPIs are often directly linked to competition outcomes, shaped by the team’s specific game plan or designated by the head coach as priorities.

Once KPIs are defined, the next step is to find supporting evidence linking them to relevant physical metrics. An alternative approach compares higher- and lower-tier athletes, such as starters vs. bench players, to identify the physical metrics that best differentiate elite from sub-elite or selected from non-selected athletes.
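The higher- vs. lower-tier comparison can be sketched in a few lines of Python. This is a minimal illustration only, assuming a standardized mean difference (Cohen's d with a pooled standard deviation) as the yardstick. The framework itself does not prescribe a statistic, and the metric names and values below are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Standardized mean difference (a minus b) using the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def rank_metrics(higher_tier, lower_tier):
    """Return (metric, d) pairs, largest absolute effect size first."""
    effects = {m: cohens_d(higher_tier[m], lower_tier[m]) for m in higher_tier}
    return sorted(effects.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical values for illustration only, not real athlete data.
starters = {
    "isometric_squat_peak_force_n": [3100, 3300, 3250, 3400, 3150],
    "cmj_height_cm": [40, 42, 39, 41, 43],
}
bench = {
    "isometric_squat_peak_force_n": [2700, 2850, 2800, 2950, 2750],
    "cmj_height_cm": [39, 41, 40, 42, 38],
}

ranked = rank_metrics(starters, bench)
for metric, d in ranked:
    print(f"{metric}: d = {d:.2f}")
```

With small squads, effect sizes like these are imprecise, so they are better read as a prompt for further scrutiny than as a final verdict on which metric belongs in the battery.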

In some cases, a sport-specific test may be available that serves as a conduit between the physical measure and the next level of the validity framework. Such tests typically isolate specific aspects of gameplay under controlled conditions and show strong associations with KPIs or higher vs. lower-tier distinctions. However, caution is warranted, as many so-called sport-specific tests lack evidence of a meaningful link to actual performance.

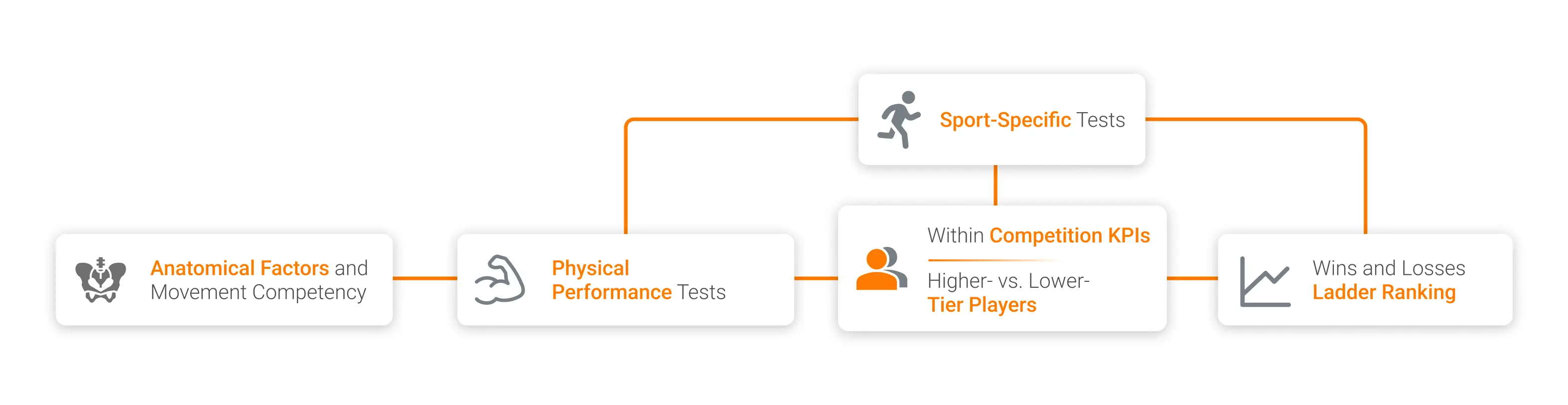

It is also important to recognize that physical test outcomes are influenced by underlying anatomical and neuromuscular factors. Muscle architecture, tendon properties, muscle size and intermuscular coordination represent a structural and foundational level of sport performance.

The relationship between different layers of the performance model.

Two examples illustrating the application of the performance model are outlined below:

Rugby: Line breaks or successful tackles may be identified as key performance indicators, either because they consistently correlate with victory when contextual factors are controlled or because they reflect critical elements of the team’s game model.

Among available physical performance measures, peak force from the isometric squat may show the strongest associations with these KPIs, supporting its inclusion in the performance testing battery.

Sample relationship between variables in the performance model for a rugby athlete.

Australian Football: One-versus-one (1 vs. 1) reactive agility tests may effectively discriminate between professional and semi-professional athletes, whereas a pre-planned change-of-direction test may not. In this scenario, the reactive agility test should be prioritized as the sport-specific test layer.

If evidence shows that reactive strength index (RSI), as measured by the drop jump (DJ) assessment on ForceDecks, is the only physical quality linked to agility performance, this supports the inclusion of DJ RSI in the testing system.

Sample relationship between variables in the performance model for an AFL athlete.

This layered approach acknowledges the complexity of sport while still enabling rigorous assessment to discern signal from noise. It helps practitioners justify their test choices on a clear logic chain – linking metrics to physical capacities, competition performance and, ultimately, outcomes.
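One way to keep that logic chain explicit is to write it down as data. The sketch below is an assumption about how a practitioner might record it, not part of the published framework: each entry maps one layer to the layer it is meant to support, using the two hypothetical examples above, and `trace_chain` (a made-up helper name) walks from a metric up to the outcome it is supposed to inform.

```python
def trace_chain(links, start):
    """Follow links upward from a starting measure until no further link exists."""
    chain = [start]
    seen = {start}
    while chain[-1] in links:
        next_layer = links[chain[-1]]
        if next_layer in seen:
            raise ValueError(f"Circular link detected at {next_layer!r}")
        chain.append(next_layer)
        seen.add(next_layer)
    return chain


# Illustrative links only; each one still needs supporting evidence.
rugby_links = {
    "isometric squat peak force": "successful tackles",
    "successful tackles": "match outcome",
}
afl_links = {
    "drop jump RSI": "1 vs. 1 reactive agility test",
    "1 vs. 1 reactive agility test": "professional vs. semi-professional status",
}

print(" -> ".join(trace_chain(rugby_links, "isometric squat peak force")))
print(" -> ".join(trace_chain(afl_links, "drop jump RSI")))
```

A metric that cannot be traced to a KPI or tier distinction in this way is a candidate for removal from the battery.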

Evaluating the Links

Once the performance model has been constructed, the next step is to systematically assess the strength and quality of each identified link.

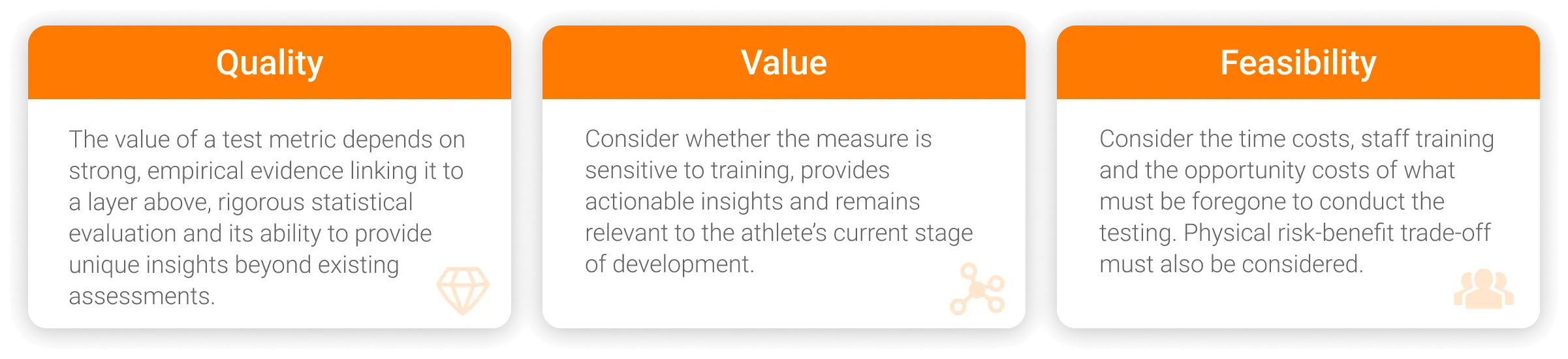

To achieve this, three practical filters are recommended, each addressing a distinct yet complementary component of validity: measurement quality, decision-making utility and organizational feasibility.

Together, these filters form a robust evaluation framework applicable across sports and training contexts. For each link between layers, the following should be applied:

1. Measurement Quality

This first filter addresses the technical integrity of the measurement – often the most familiar aspect of measurement properties. Key considerations include:

- How well does the measure align with a gold standard (criterion validity)?

- Is it reliable, with acceptable measurement error?

Beyond technical quality, practitioners must also ask:

- Is there strong empirical, statistical and mechanistic support linking the metric to performance outcomes?

- Does the metric offer unique insight beyond existing assessments?

This level of scrutiny ensures that selected measures are both technically sound and practically meaningful.
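As a rough illustration of the reliability question, measurement error can be estimated from a simple test-retest design. The sketch below assumes two trials per athlete and uses the typical error (standard deviation of the difference scores divided by √2) expressed as a coefficient of variation. This is one common convention rather than the only option, and the jump heights are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def typical_error(trial_1, trial_2):
    """Typical error of measurement: SD of paired differences / sqrt(2)."""
    diffs = [b - a for a, b in zip(trial_1, trial_2)]
    return stdev(diffs) / sqrt(2)


def cv_percent(trial_1, trial_2):
    """Typical error expressed as a percentage of the grand mean."""
    grand_mean = mean(list(trial_1) + list(trial_2))
    return 100 * typical_error(trial_1, trial_2) / grand_mean


# Hypothetical jump heights (cm) for four athletes tested on two days.
day_1 = [30.0, 35.0, 40.0, 45.0]
day_2 = [31.0, 34.0, 42.0, 45.0]

te = typical_error(day_1, day_2)
cv = cv_percent(day_1, day_2)
print(f"Typical error: {te:.2f} cm, CV: {cv:.1f}%")
```

What counts as "acceptable" error depends on the size of the change the practitioner needs to detect, which is the focus of the next filter.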

2. Decision-Making Utility

The second filter focuses on usefulness: does the data inform decision-making?

Effective tests simplify complexity by providing actionable insights that guide interventions. To be useful, a test must also be responsive – able to detect meaningful changes over time in modifiable qualities.

Even valid, responsive tests can lose relevance if an athlete plateaus in that area. In such cases, shifting focus to new metrics may better support continued development. Key considerations include:

- Will this measure change with training?

- Is it actionable?

- Is it still relevant to the athlete’s stage of development?

If not, it may be time to reassess its role in the program.
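Responsiveness can be checked by comparing an observed change against both the test's measurement error and a threshold for a worthwhile change. The sketch below is a simplified heuristic, not the framework's prescribed method: it assumes the smallest worthwhile change is 0.2 × the between-athlete standard deviation (a widely used convention) and that the typical error has already been estimated. All values are hypothetical.

```python
from statistics import stdev


def smallest_worthwhile_change(baseline_scores, factor=0.2):
    """Assumed convention: a fraction of the between-athlete SD."""
    return factor * stdev(baseline_scores)


def interpret_change(change, swc, typical_error):
    """Classify an individual's change relative to noise and the SWC."""
    magnitude = abs(change)
    if magnitude <= typical_error:
        return "within measurement error"
    if magnitude < swc:
        return "detectable but below smallest worthwhile change"
    return "meaningful change"


# Hypothetical baseline jump heights (cm) and an assumed typical error.
baseline = [30, 35, 40, 45]
swc = smallest_worthwhile_change(baseline)
te = 0.9

for change in (0.5, 1.1, 2.0):
    print(f"{change:+.1f} cm: {interpret_change(change, swc, te)}")
```

If the typical error is larger than the smallest worthwhile change, the test may struggle to detect the changes that matter, which is itself a reason to question its place in the battery.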

3. Organizational Feasibility

Feasibility refers to an organization’s ability to implement testing and analysis efficiently and sustainably. Drawing from strategic planning and sport management principles, feasibility analysis weighs the costs – financial, time and opportunity – against potential benefits. This includes not only expenses like equipment and staffing, but also setup time, athlete fatigue and potential trade-offs in training time or assessment quality.

Even highly valid tests, like isokinetic dynamometry, may be impractical if their burden outweighs their value. In contrast, a simpler, low-cost test, such as an adduction/abduction assessment on ForceFrame, may be justified if it delivers useful insights with minimal disruption.

Applying the Physical Assessment Framework

The real advantage of the proposed physical assessment framework is its versatility. It is not designed as a rigid formula but as a flexible guide that can adapt across different sports and organizational settings.

At its broadest, it functions as a simple checklist for comparing the performance measures a practitioner is considering. Applied at a more granular level, it lets users adjust the weight given to each criterion so that the evaluation reflects what matters most for performance and training decisions in their setting.

For instance, some performance measures might need to pass through a first stage of high-priority criteria before they are even shortlisted. A second stage might then explore potential weaknesses or constraints, even if not every box is ticked, before making final decisions on implementation.
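The two-stage idea, with a gate followed by a weighted comparison, can be sketched as follows. Everything here is hypothetical: the 0 to 1 rating scale, the weights, the gate threshold and the candidate tests are placeholders that a practitioner would replace with their own judgments.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    ratings: dict  # criterion name -> rating between 0 and 1


def passes_gate(candidate, must_pass, threshold):
    """Stage 1: every high-priority criterion must meet the threshold."""
    return all(candidate.ratings.get(c, 0.0) >= threshold for c in must_pass)


def weighted_score(candidate, weights):
    """Stage 2: weighted average of ratings across all criteria."""
    total_weight = sum(weights.values())
    weighted_sum = sum(w * candidate.ratings.get(c, 0.0) for c, w in weights.items())
    return weighted_sum / total_weight


def shortlist(candidates, must_pass, weights, threshold=0.6):
    """Gate first, then rank the survivors by weighted score."""
    survivors = [c for c in candidates if passes_gate(c, must_pass, threshold)]
    scored = [(c.name, round(weighted_score(c, weights), 3)) for c in survivors]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights and ratings for three placeholder tests.
weights = {"measurement_quality": 0.4, "decision_utility": 0.4, "feasibility": 0.2}
candidates = [
    Candidate("test_a", {"measurement_quality": 0.9, "decision_utility": 0.5, "feasibility": 0.3}),
    Candidate("test_b", {"measurement_quality": 0.7, "decision_utility": 0.8, "feasibility": 0.9}),
    Candidate("test_c", {"measurement_quality": 0.4, "decision_utility": 0.9, "feasibility": 0.9}),
]

result = shortlist(candidates, must_pass=["measurement_quality"], weights=weights)
print(result)
```

Here `test_c` is dropped at the gate despite strong ratings elsewhere, while the remaining tests are ranked by their weighted scores. Changing the weights is how the same checklist can be tuned to different sports or organizational priorities.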

In essence, this framework provides a layered, strategic pathway that begins with establishing foundational validity, continues by refining the process to suit specific needs and ends by ensuring that selected metrics are not only evidence-based but also fit-for-purpose within the operational realities of the organization.

A multi-layer framework showing connections and examples between layers.

Framework Iterations: Define, Evaluate and Discover

Technology has expanded what is possible in athlete assessment. VALD systems make it easy to capture high-quality data and integrate it across a wide range of settings. However, the true value of these tools depends on how well they are embedded in the decision-making process and how they link to performance outcomes.

The framework outlined guides practitioners through that process. By building a performance model and evaluating tests through technical, decision-making and organizational lenses, practitioners ensure that every measurement is both meaningful and actionable.

Testing should highlight the athlete’s key physical qualities in a way that fits the practitioner’s environment. Not every metric needs to be perfect – but it must serve a clear purpose. When applied well, testing simplifies decisions and becomes a competitive advantage.

For teams focused on making better decisions, this approach offers a practical path: define what matters, evaluate what you measure and turn data into performance insight.

If you would like to learn how VALD’s human measurement technology can support smarter test selection, align physical metrics with performance outcomes and turn data into actionable insight, get in touch with our team today. If you have questions about the framework itself, contact Lachlan here.

References

- James, L. P., Haycraft, J. A. Z., Carey, D. L., & Robertson, S. J. (2024). A framework for test measurement selection in athlete physical preparation. Frontiers in Sports and Active Living, 6. https://doi.org/10.3389/fspor.2024.1406997